Conveya had a survey builder that let users send connected surveys to employees and their supervisors. The client wanted a survey reminder tool to ease the admin burden, but our research found that, with reminders alone, they would most likely keep repeating the same admin cycle. This case study covers what we did to eliminate time wasted on admin work.

Lead designer, working alongside the development team, project managers and platform users.

Led pre-project research, including surveys, group studies, customer feedback and data analytics.

Created wireframes, then high-fidelity prototypes of the plain-language rule builder.

Led the pilot roll-out, monitored usage analytics, and produced a post-launch optimisation plan.

Defined success metrics (set-up time, error rate, admin hours reduced) with stakeholders.

Clients want Conveya to handle pulse-check surveys on the platform. The platform must use the learner start dates already stored in the system, schedule a survey every three months from each learner’s start date, show a running “due soon” list on a dashboard, and send connected surveys to each learner and their manager.
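The scheduling requirement can be illustrated with a minimal Python sketch. It assumes a hypothetical `Learner` record with `learner_id`, `manager_id` and `start_date`, and an assumed 14-day “due soon” window (the brief does not state one). Each due date is counted from the original start date rather than from the previous due date, so month-end clamping (31 January to 30 April) does not push later cycles earlier.

```python
import calendar
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Learner:
    # Hypothetical record shape; real field names in Conveya may differ.
    learner_id: str
    manager_id: str
    start_date: date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def next_pulse_check(start: date, today: date, interval_months: int = 3) -> date:
    """First pulse-check date on or after `today`, counting every interval from `start`."""
    n = 1
    while add_months(start, n * interval_months) < today:
        n += 1
    return add_months(start, n * interval_months)


def due_soon(learners: list[Learner], today: date, window_days: int = 14) -> list[tuple[date, Learner]]:
    """Learners whose next pulse check falls within the (assumed) window, soonest first."""
    horizon = today + timedelta(days=window_days)
    items = [(next_pulse_check(learner.start_date, today), learner) for learner in learners]
    return sorted((item for item in items if item[0] <= horizon), key=lambda item: item[0])


def connected_recipients(learner: Learner) -> list[str]:
    """A connected survey goes to the learner and their manager together."""
    return [learner.learner_id, learner.manager_id]


learners = [
    Learner("L1", "M1", date(2024, 1, 15)),
    Learner("L2", "M2", date(2024, 3, 31)),
]
for due, learner in due_soon(learners, today=date(2024, 6, 20)):
    print(due, connected_recipients(learner))  # 2024-06-30 ['L2', 'M2']
```

Here L1’s next check (15 July) falls outside the window, while L2’s (31 March plus three months, clamped to 30 June) appears on the list.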

Clients regularly ask for help with recurring admin tasks such as pulse checks, progress nudges and event-based data updates. These requests show up across our Jira board and support tickets, pulling Conveya’s internal team away to respond, build workarounds or trigger actions manually. Conveya’s admin burden also grows as the company grows.

Admin chores are increasing for both clients and the internal admin team. We set out to explore how we could reduce the growing admin workload shared across clients and Conveya’s internal team. We needed to investigate common patterns, pain points and opportunities to ease these tasks without relying on manual fixes or workarounds.

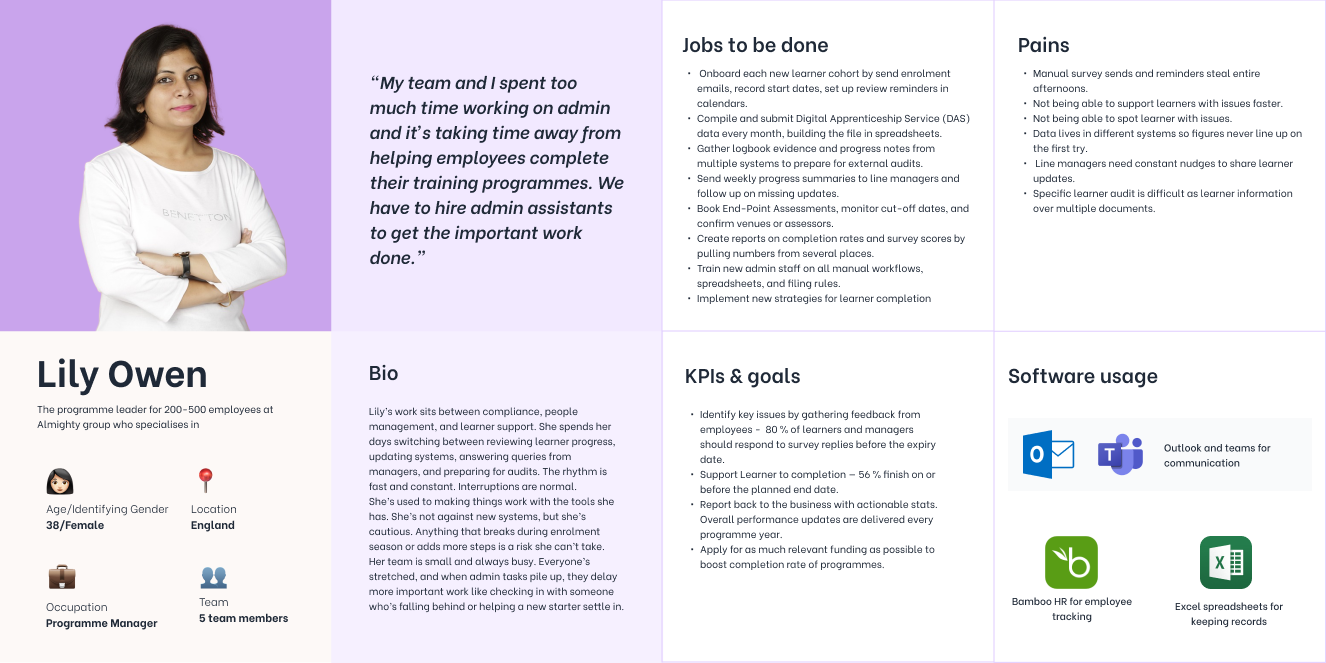

Lily Owens is a programme manager who looks after 200–500 learners at a time while juggling survey deadlines, DAS reports and audit prep. This persona reflects the kind of user who’s overwhelmed by admin and under pressure to deliver results. Much of their work requires communication between employees, the wider business, training course providers and employees’ managers.

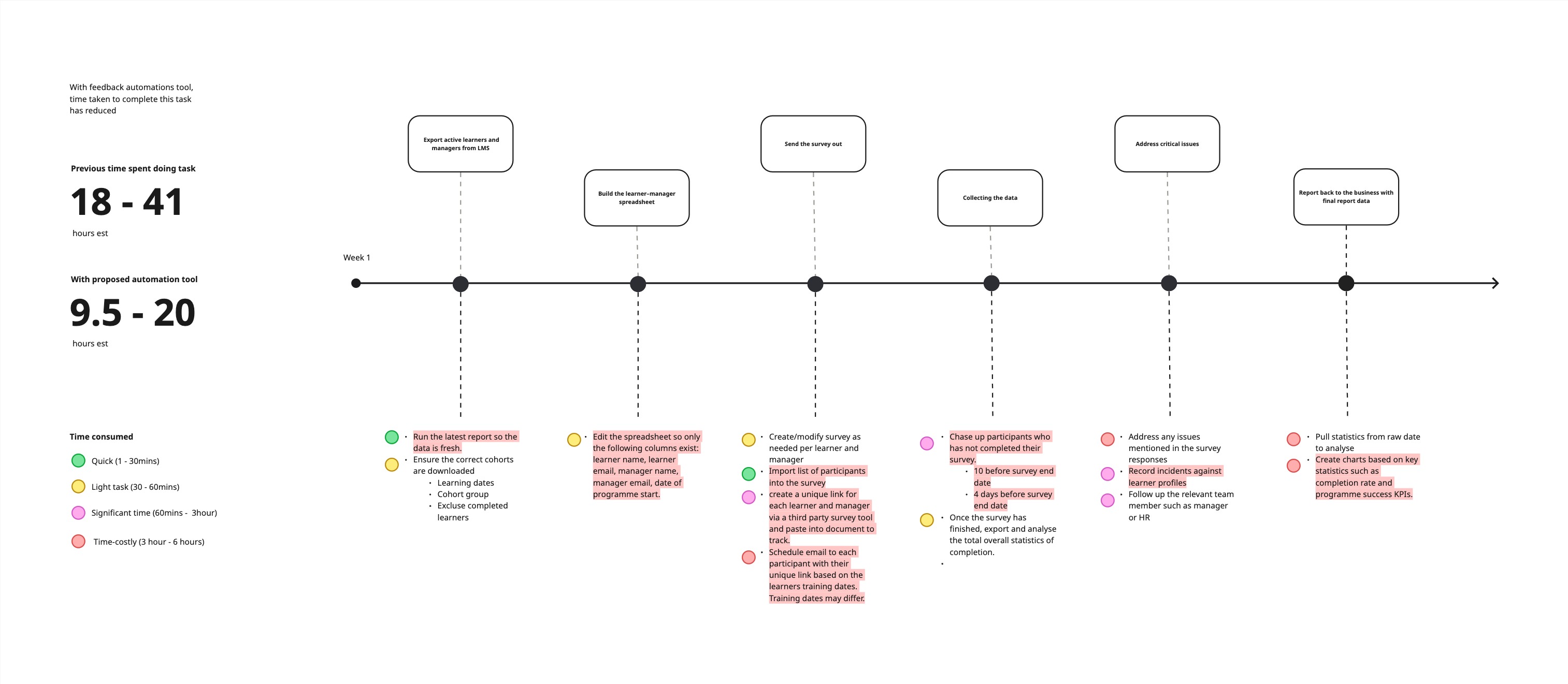

Speaking to clients, we learned that the entire process is time-consuming and mind-numbing. For example, every quarter the client sends unique surveys to 100 learners and 100 managers. A colour-coded task analysis put each cycle at 18–41 hours, time that could instead be spent helping learners complete their learning programme. Users have found this process tedious and have long requested this feature.

The team and I gathered client quotes, Jira tickets and internal feedback to understand where admin work was piling up. I ran a short ideation workshop, mapping problems and surfacing ideas through sticky notes and dot voting. As we worked through the themes, we noticed that ideas across different problems kept pointing to the same thing: tasks were repetitive and rules were predictable. That’s when automation started to emerge, not as a feature request, but as a natural response to the patterns we were seeing.


To make setup fast and reduce cognitive load, we limited each automation to a small set of possible data fields. These fields are pulled from existing employee records, feedback scores or programme dates. By narrowing the options, users can build rules quickly without worrying about pulling the wrong field.

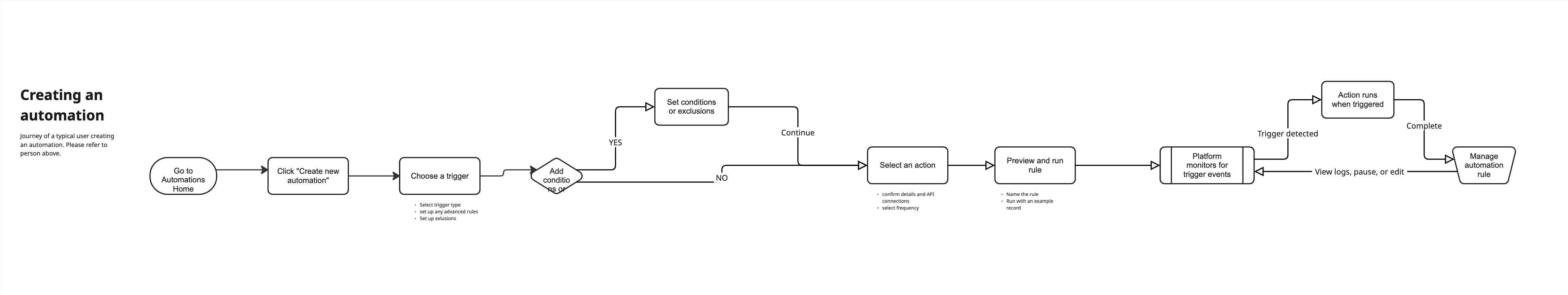

From there, I translated the use cases into flow diagrams to map out how each rule would behave. These diagrams helped clarify where data would come from, what conditions needed to be met, and what the user could expect at each step. I worked closely with engineers during this phase to make sure the logic was viable and wouldn’t break under real-world scenarios like missing fields or duplicate entries.
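The real-world edge cases mentioned above, missing fields and duplicate entries, could be handled before any rule runs. A minimal sketch, assuming records arrive as plain dictionaries with hypothetical `learner_id`, `manager_id` and `start_date` keys: incomplete rows are skipped and reported rather than failing the whole run, and duplicates keep only the first occurrence (an assumed policy, not one stated in this case study).

```python
from datetime import date

# Hypothetical required fields; a real rule would declare its own.
REQUIRED_FIELDS = ("learner_id", "manager_id", "start_date")


def prepare_records(records: list[dict]) -> tuple[list[dict], list[str]]:
    """Split raw records into usable rows and readable issues instead of failing the run."""
    usable: list[dict] = []
    issues: list[str] = []
    seen: set[str] = set()
    for index, record in enumerate(records):
        # Treat absent keys, None and empty strings as missing.
        missing = [name for name in REQUIRED_FIELDS if record.get(name) in (None, "")]
        if missing:
            issues.append(f"row {index}: missing {', '.join(missing)}")
            continue
        if record["learner_id"] in seen:
            # Assumed policy: keep the first occurrence, report the rest.
            issues.append(f"row {index}: duplicate learner {record['learner_id']}")
            continue
        seen.add(record["learner_id"])
        usable.append(record)
    return usable, issues


records = [
    {"learner_id": "L1", "manager_id": "M1", "start_date": date(2024, 1, 15)},
    {"learner_id": "L2", "manager_id": "", "start_date": date(2024, 2, 1)},
    {"learner_id": "L1", "manager_id": "M1", "start_date": date(2024, 1, 15)},
]
usable, issues = prepare_records(records)
print(len(usable), issues)
# 1 ['row 1: missing manager_id', 'row 2: duplicate learner L1']
```

Returning the issues as plain sentences, rather than raising an exception, keeps the rest of the run going and gives the interface something readable to surface to the admin.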

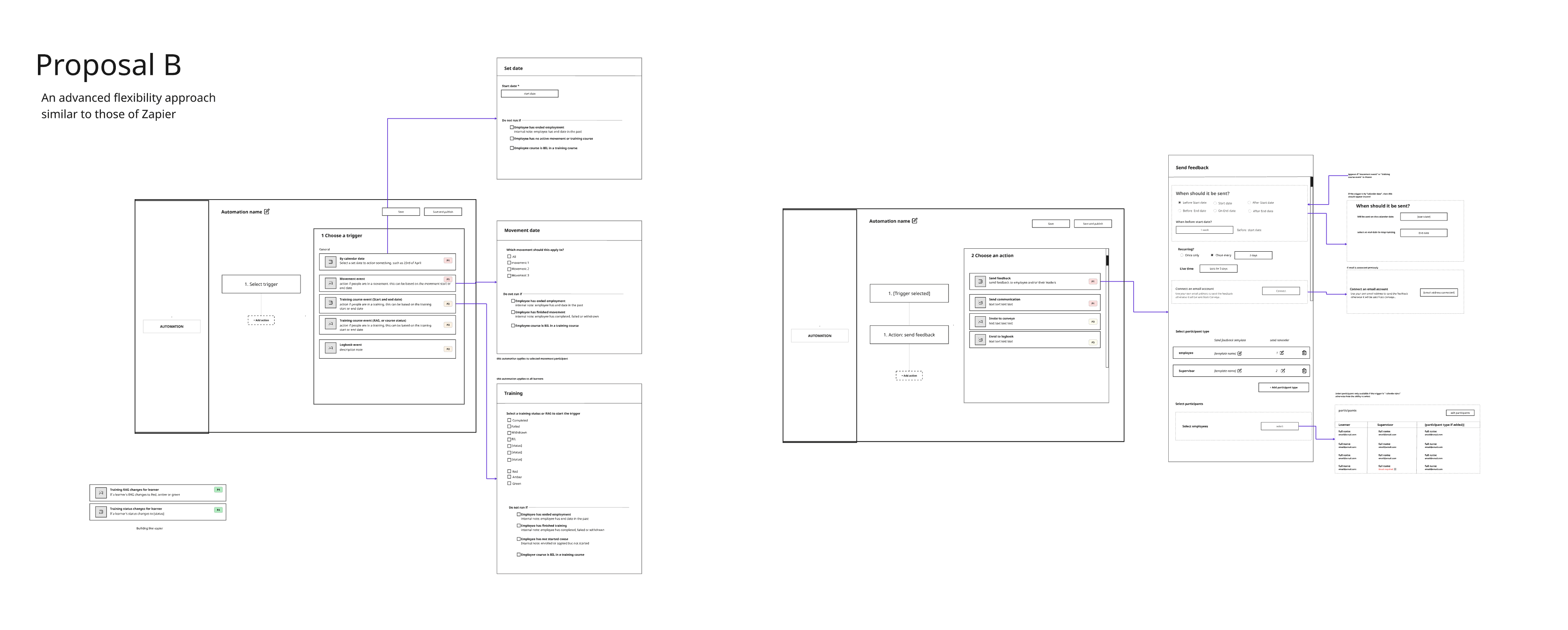

To bring clients back into the process, I shared a short walkthrough of the concept using screenshots and early wireframes. I kept the focus on how this would reduce repetitive admin and give them more control over tasks they were already doing. The feedback was immediate: clients recognised the use cases straight away, and many replied with examples of their own flows they’d want to automate. One even said, “We’ve been manually doing this for over a year, I didn’t realise it could be this simple.” However, users also requested much more flexibility in what they could do.

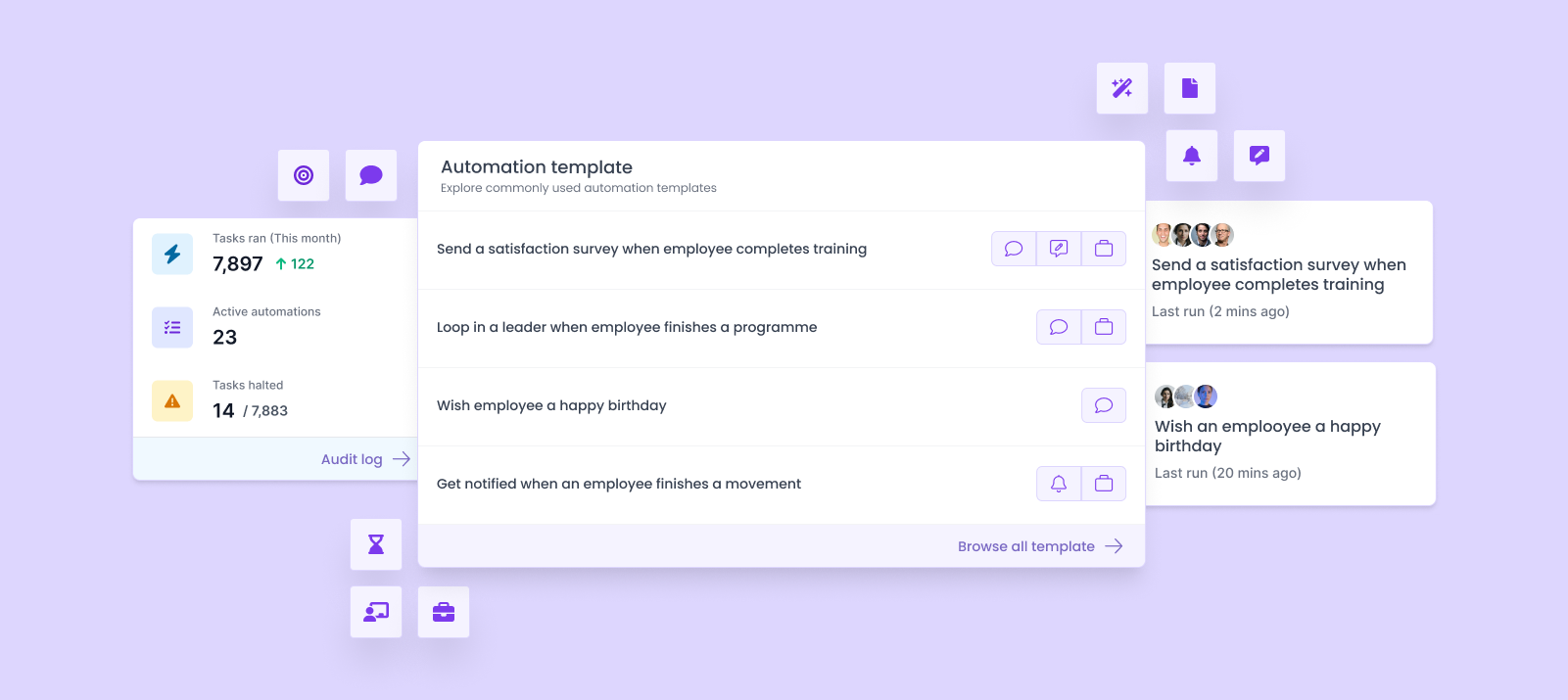

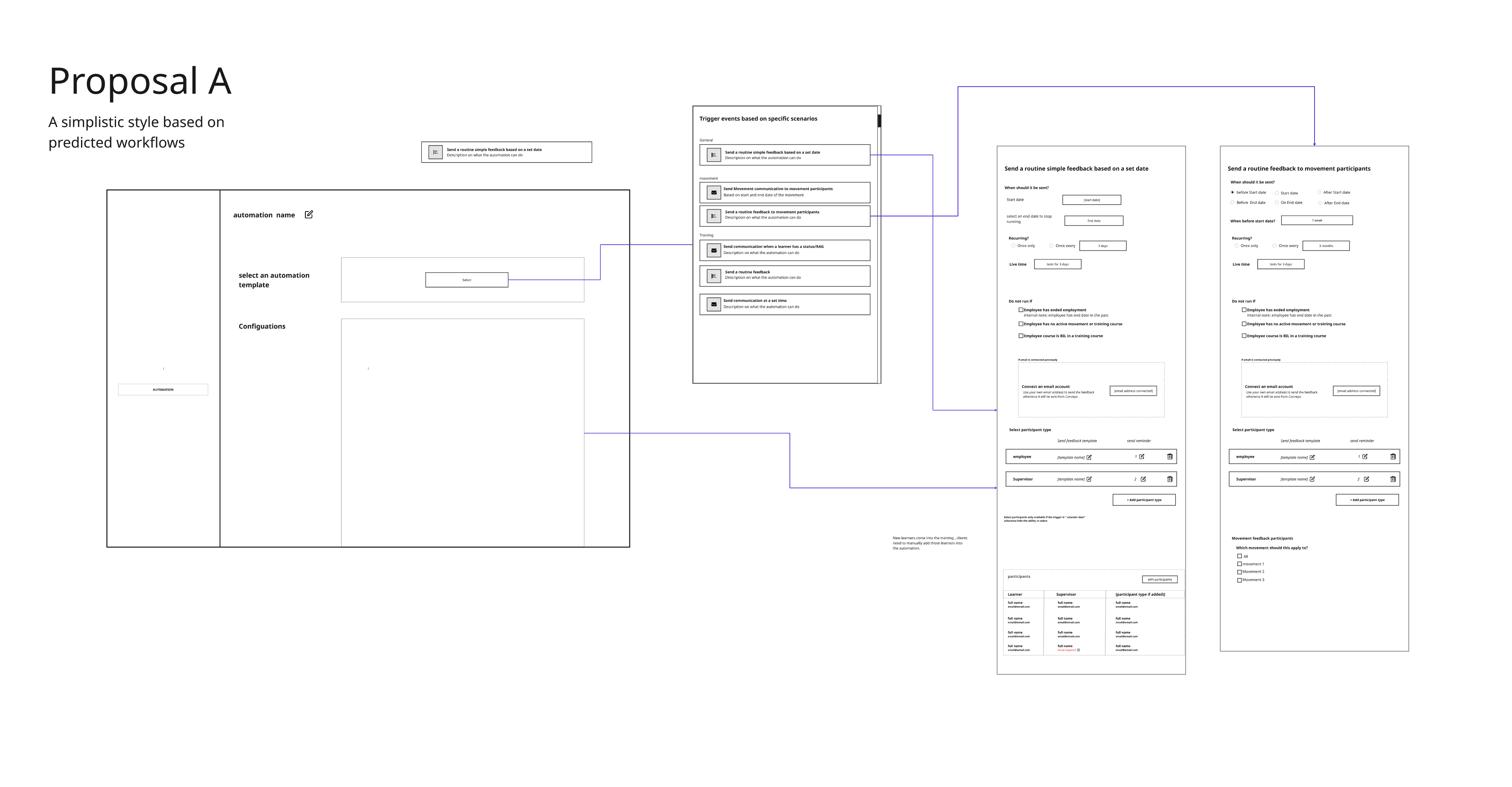

Next, I moved into interface design, creating the first version of the rule builder with clarity in mind, especially for users unfamiliar with logic flows. The layout focused on one trigger, followed by one or more actions, arranged top to bottom. I also designed a template list to help users get started without building from scratch.

The research into admin pain points made it clear that setup and visibility would be critical. Users were already overwhelmed by survey sends, chasing responses and manually logging actions. That guided a few key decisions:

Templates appear first to support common use cases and reduce decision fatigue

Task counts and error alerts help users trust what’s running without second-guessing

Active/inactive states are clearly labelled so users don’t miss what’s turned off

Bulk visibility and search allow users to manage dozens of automations at scale

To build the trigger system, we mapped recurring admin tasks using client requests and internal tickets. Common events, such as training completion or missed reviews, were turned into structured trigger types. The UI reflects that structure: users select a trigger based on existing data such as dates or statuses, then choose an action. The layout follows the order in which these tasks usually happen, making the logic easier to set up and to tie back to real use cases.
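One way to model this structure is a rule made of exactly one trigger and an ordered list of actions, with trigger fields drawn from a fixed list rather than typed freely. The sketch below is hypothetical: the `TriggerField` values, the two trigger types (`DateTrigger`, `StatusTrigger`) and the action functions are illustrations, not Conveya’s actual data model.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import Callable, Union


class TriggerField(Enum):
    # Hypothetical closed list of fields a trigger may read; users pick, never type.
    START_DATE = "start_date"
    TRAINING_STATUS = "training_status"
    REVIEW_STATUS = "review_status"


@dataclass(frozen=True)
class DateTrigger:
    source: TriggerField
    days_after: int

    def fires(self, record: dict, today: date) -> bool:
        # Fires on the day that is `days_after` days past the record's date; missing dates never fire.
        value = record.get(self.source.value)
        return value is not None and value + timedelta(days=self.days_after) == today


@dataclass(frozen=True)
class StatusTrigger:
    source: TriggerField
    equals: str

    def fires(self, record: dict, today: date) -> bool:
        # Fires whenever the status field holds the expected value.
        return record.get(self.source.value) == self.equals


Action = Callable[[dict], str]


@dataclass
class Rule:
    name: str
    trigger: Union[DateTrigger, StatusTrigger]  # exactly one trigger
    actions: list[Action]                       # one or more actions, run top to bottom
    active: bool = True


def run_rule(rule: Rule, records: list[dict], today: date) -> list[str]:
    """Run each action in order for every record whose trigger fires; inactive rules do nothing."""
    if not rule.active:
        return []
    return [action(r) for r in records if rule.trigger.fires(r, today) for action in rule.actions]


def send_reminder(record: dict) -> str:
    return f"reminder -> {record['learner_id']} and {record['manager_id']}"


def log_action(record: dict) -> str:
    return f"logged reminder for {record['learner_id']}"


rule = Rule(
    name="Missed review nudge",
    trigger=StatusTrigger(TriggerField.REVIEW_STATUS, "missed"),
    actions=[send_reminder, log_action],
)
records = [
    {"learner_id": "L1", "manager_id": "M1", "review_status": "missed"},
    {"learner_id": "L2", "manager_id": "M2", "review_status": "done"},
]
print(run_rule(rule, records, today=date(2024, 6, 1)))
# ['reminder -> L1 and M1', 'logged reminder for L1']
```

Keeping the trigger fields in an enum mirrors the limited field list in the interface: a rule simply cannot reference a field that is not on the list. The `active` flag corresponds to the active/inactive states shown in the rule list.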

We considered the impact before building the feature, focusing on tasks that could save time at scale. In the survey example above, replacing the manual survey send flow reduced effort per cycle from 18–41 hours to around 9.5–20 hours. With this structure in place, the same logic can be applied across other clients, teams and survey types.
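Taking the quoted figures at face value, the saving per cycle works out as below. The yearly line is an extrapolation that assumes four cycles a year, following the quarterly example earlier in this case study.

$$
\begin{aligned}
\text{saved per cycle} &= (18 - 9.5)\ \text{to}\ (41 - 20) = 8.5\ \text{to}\ 21\ \text{hours} \\
\text{relative saving} &= \tfrac{8.5}{18} \approx 47\%\ \text{to}\ \tfrac{21}{41} \approx 51\% \\
\text{saved per year (4 cycles)} &= 4 \times 8.5\ \text{to}\ 4 \times 21 = 34\ \text{to}\ 84\ \text{hours}
\end{aligned}
$$

In other words, automation roughly halves the effort of each survey cycle.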